The UI evolution with LLM interfaces

Intent is not all you need, process matters too

Hi! Welcome to the second of a three-part series on building AI products.

UI for AI products ← this one

Business models and pricing for AI products

Scroll to the bottom for more resources and related readings.

How many of your interactions with software today could be replaced with natural language?

I’m going to guess not a whole lot.

A chat interface requires users to have a specific intent and a defined outcome, and to articulate both in natural language. But oftentimes people only discover their intent through the process of interacting with the software, through its graphical user interface. The chat interface won’t necessarily replace current interfaces, but it can augment them and create more dynamic product experiences.

The new UI paradigm with generative AI will be evolutionary, not revolutionary. Let’s explore:

Why the chat UI won’t completely displace GUI

Two waves of UIs for LLM-powered interfaces:

Responsive: human-initiated action, with a chat interface embedded to augment the existing interface

Proactive: AI-initiated action, where the task is completed automatically in the background and surfaced through a notification and review interface for the human

The challenges with a text box/chat UI

Too universal.

“You can ask it anything” is not always a good thing. Without any constraints, the interface is too general and creates a cold-start problem. A blank page puts the pressure on the user to describe what they want in natural language. Where do you begin? What do you ask? How do you phrase it so that it captures your intent?

Slow in some cases.

In some situations, natural language is a lot slower than some good ol’ buttons. A simple example: clicking a name in a list of names is a lot faster and more intuitive than typing out that command. This becomes very obvious in complex design-type products, where interacting with a GUI is simply way faster than having to “spell out” what you want to accomplish.

Missing immediate interactive feedback.

Oftentimes, people don’t start with a perfectly defined outcome. It’s through directly manipulating the software and getting immediate feedback, step by step, that they work out their goal. Getting feedback from the computer is critical to inform the next steps. Bret Victor’s famous talk “Inventing on Principle” describes this idea best.

A GUI helps users narrow down their intention by keeping all the relevant options (UI components) visible. This is especially the case with complex design tools, where hand-eye coordination is a critical part of the process. As such, the LLM interface is unlikely to completely displace the typical interface; rather, it will stack on top of it.

LLM UI wave 1: Responsive (human initiated)

We’re in wave 1 of AI products, where the LLM interface is typically a textbox that acts as an assistant/agent within an application. The user initiates the ask, which usually isn’t generalizable or common enough to have been represented by a button. This is perhaps what Clippy would’ve been in an alternate universe where it was created at the right time.

As an assistant augmenting an existing interface, it’s bound by the specific use case and context of the product, which makes it a lot more approachable than a blank page. A few components to this responsive interface:

Prompt templates (grimoires) that encode knowledge and expertise in ways that can be reused.

A feedback mechanism to accept or iterate on the generated output.
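
To make these two components a bit more concrete, here is a minimal Python sketch of how a reusable prompt template and an accept/iterate loop might fit together. Everything in it is an assumption for illustration: the template wording, the `Draft` type, the `generate`, `iterate`, and `accept` helpers, and the `fake_model` stand-in (a real product would call its LLM provider there instead).

```python
from dataclasses import dataclass, field
from string import Template
from typing import Callable, List

# Hypothetical prompt template: the expertise lives in the wording,
# and the product fills in the blanks from its own context.
SUMMARIZE_NOTES = Template(
    "You are a workshop facilitator. Summarize these sticky notes "
    "into $count key themes for a $audience audience:\n$notes"
)


@dataclass
class Draft:
    """One generated output plus the state of the feedback loop."""
    prompt: str
    output: str
    accepted: bool = False
    history: List[str] = field(default_factory=list)


def generate(template: Template, model: Callable[[str], str], **context: str) -> Draft:
    """Fill the template with product context and ask the model for a first draft."""
    prompt = template.substitute(**context)
    return Draft(prompt=prompt, output=model(prompt))


def iterate(draft: Draft, model: Callable[[str], str], instruction: str) -> Draft:
    """Keep the previous output in history and regenerate with the user's adjustment."""
    draft.history.append(draft.output)
    draft.prompt = f"{draft.prompt}\n\nRevise the previous answer: {instruction}"
    draft.output = model(draft.prompt)
    return draft


def accept(draft: Draft) -> Draft:
    """Mark the draft as accepted so the product can commit it."""
    draft.accepted = True
    return draft


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call, so the sketch runs on its own."""
    return f"[draft based on {len(prompt)} characters of prompt]"


draft = generate(
    SUMMARIZE_NOTES,
    fake_model,
    count="3",
    audience="executive",
    notes="- onboarding is confusing\n- pricing page is unclear",
)
draft = iterate(draft, fake_model, "make it shorter")
draft = accept(draft)
```

The user never sees the template itself. They see a button or a short textbox scoped to the product’s context, which is what makes this more approachable than a blank page.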

Some popular examples:

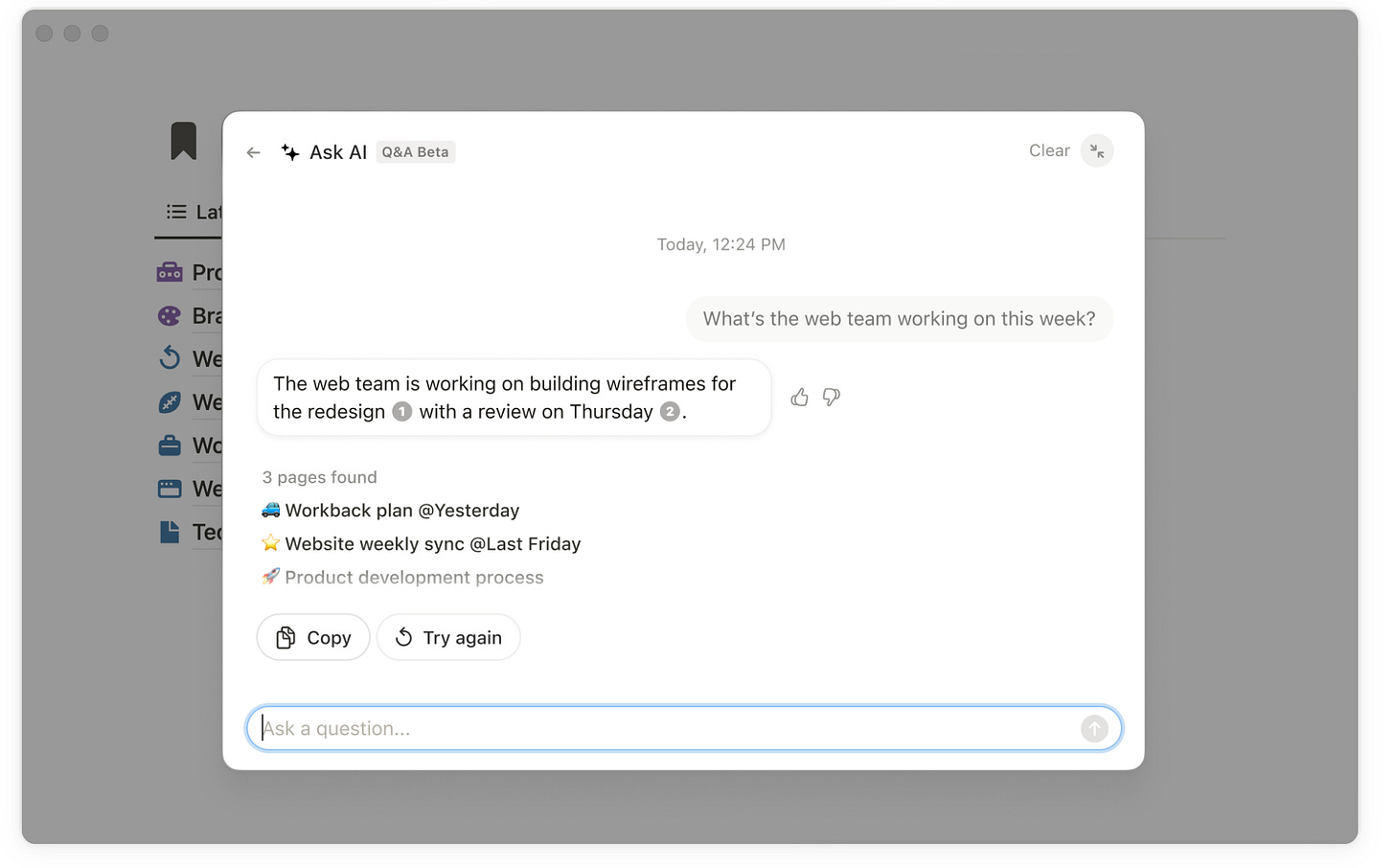

Notion Q&A - search for answers in a large, disorganized knowledge base.

FigJam AI - generate a whiteboard

Miro Assist - generate a presentation or summary from sticky notes

LLM UI wave 2: Proactive (AI initiated)

As the models become more sophisticated, we’ll likely see a shift from human-initiated tasks to AI proactively initiating tasks in the background. The output would need a clear indication that it’s AI generated, with a mechanism for the user to review it. In creative tasks, the conversation interface could turn into a way to iterate with the AI, for example, by providing specific adjustment instructions that refine the generated output toward the desired outcome.

Possible components to this proactive interface:

Notification of completed tasks for awareness and review.

Dynamically generated UI that adapts to the user’s intent. AI can analyze the user’s behaviour and proactively generate UI that helps the user get closer to their desired outcome.

If you could get AI to start to suggest interface elements to people and do that in a way that actually makes sense, I think that could unlock a whole new era of design in terms of creating contextual designs, designs that are responsive to what the user’s intent is at that moment.

- Dylan Field (Figma)

One interesting example I’ve seen is Brex Assistant. It automatically generates receipts and crafts contextually aware memos. The marketing message is “no action required”.
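
As a rough illustration of the notification-and-review component described above, here is a small Python sketch. It is not how Brex or any other product implements this. The `AITask`, `Status`, and `ReviewQueue` names are hypothetical, and the sketch only shows the shape of the idea: the AI finishes work in the background, the result is labeled as AI generated, and it waits for a human to review it.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class AITask:
    """A task the AI completed on its own, awaiting human review."""
    title: str
    result: str
    ai_generated: bool = True  # always labeled so the UI can show a badge
    status: Status = Status.PENDING_REVIEW


class ReviewQueue:
    """Holds AI-completed tasks until a human approves or rejects them."""

    def __init__(self) -> None:
        self._tasks: List[AITask] = []

    def complete(self, title: str, result: str) -> str:
        """Record a finished background task and return the notification text."""
        self._tasks.append(AITask(title=title, result=result))
        return f"AI completed '{title}'. Review when ready."

    def pending(self) -> List[AITask]:
        """Tasks the user has not reviewed yet."""
        return [t for t in self._tasks if t.status is Status.PENDING_REVIEW]

    def review(self, task: AITask, approve: bool) -> None:
        """Apply the human decision to a task."""
        task.status = Status.APPROVED if approve else Status.REJECTED


queue = ReviewQueue()
notification = queue.complete("Expense memo", "Team lunch with client")
for task in queue.pending():
    queue.review(task, approve=True)
```

The design choice worth noticing is that nothing is final until a person reviews it. “No action required” can be the default path while the review step stays available.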

What does this all mean?

Despite ChatGPT’s popularity, the LLM chat interface is not a panacea that’ll replace all other interfaces. It comes down to one question: which matters more, the process of iteration or the speed of generating an output?

If there’s a specific desired outcome, a chat interface can be useful for carrying out the instruction.

But if direct manipulation of components is required to arrive at the desired outcome/intent, then GUIs will still provide a better feedback mechanism.

New input devices don’t kill their predecessors, they stack on top of them. Voice won’t kill touchscreens. Touchscreens didn’t kill the mouse. The mouse didn’t kill the command line. Analysts yearn for a simple narrative where the birth of every new technology instantly heralds the death of the previous one, but interfaces are inherently multimodal.

- Des Traynor (Intercom)

Certainly, new interfaces such as AI-generated suggestions embedded within the existing UI would be exciting. The ultimate goal has always been to help users translate their intention into their desired outcome.

What are your predictions about how AI will evolve user interfaces? What new interfaces are you experimenting with when incorporating AI in your product?

🔗 Interesting reads

AI: first new UI paradigm in 60 years. “UI paradigm 3: Intent-Based Outcome Specification. Clicking or tapping things on a screen is an intuitive and essential aspect of user interaction that should not be overlooked. Thus, the second UI paradigm will survive, albeit in a less dominant role. Future AI systems will likely have a hybrid user interface that combines elements of both intent-based and command-based interfaces while still retaining many GUI elements.”

Language Model UXes in 2027. …“interactivity as part of the LLM’s response, such that instead of sending me text, I could directly be presented with the UX I need to carry out any parts of the task that the LLM-based system couldn’t handle internally.”

Unbundling AI. “Whenever we get a new tool, we start by forcing it to fit our existing ways of working, and then over time we change the work to fit the new tool. We try to treat ChatGPT as though it was Google or a database instead of asking what it is useful for. How can we change the work to take advantage of this?”

LLM Powered Assistants for Complex Interfaces. “While I do not dispute that LLM based chat interfaces are incredibly powerful, I do not think they will necessarily wipe out all other forms of interface design. If anything, I think that they have a powerful role to play in augmenting any type of interface, particularly ones that are complex - by making them easier to use and navigate for new users.”

5 Ways AI Will Transform Creativity with Adobe’s Scott Belsky. “Creativity is the new productivity. Creative professionals aren’t going anywhere. They’ll just have more time to develop quality solutions and spend less time painstakingly doing tasks that take AI seconds to achieve.”